BC Law AI News & Insights: July 2025 Edition

In this newsletter:

- From the Clinic to the Chambers: A reality check on how AI is actually being used in legal practice right now, and what it means for teaching.

- The High Cost of Fake Citations: A cautionary tale on professional responsibility and the urgent need for AI literacy.

- Interview Mode: How to transform AI from a generic intern into a sharp, context-aware assistant.

- The Latest from the AI Labs: Key updates on GPT-5, Gemini, Claude, and more.

- AI News Roundup: Other noteworthy developments at the intersection of AI, law, and society.

Tim Lindgren (Assistant Director, Design Innovation, Center for Digital Innovation in Learning) and Kyle Fidalgo (Academic Technologist, Administrative and Technology Resources, Law Library) are hosting another round of the AI Test Kitchen starting on September 16. In this three-part workshop series you will learn how to leverage custom AI assistants and align them with your intentions, goals, and specific use cases. No prior AI experience is necessary to join.

All sessions will be held online on Zoom (link provided after registration) and will take place on the dates below.

- Tuesday, September 16, 12:00-1:30 pm

- Tuesday, September 23, 12:00-1:30 pm

- Tuesday, September 30, 12:00-1:30 pm

Learn more about the workshop on the dedicated resource page. You can also register directly here.

From the Clinic to the Chambers: A Reality Check on AI in Legal Practice

As generative AI continues to dominate headlines, it can be difficult to separate the hype from the on-the-ground reality. How are legal professionals really using these tools today? This newsletter focuses on several stories from recent months in an attempt to offer a grounded perspective: AI can be a powerful assistant, but for high-stakes decision making or where accuracy is paramount, it is not a replacement for foundational legal skill and human judgment.

The View from the Clinic: “A Second Set of Virtual Eyes”

In a recent Law360 article, Cornell Law professors Celia Bigoness and David Reiss reflected on a semester of using generative AI in their entrepreneurship law clinics. Their focus was narrow: how transactional lawyers might integrate these tools into their practice.

One key finding: using AI to draft documents, like company bylaws, was less efficient than starting with a quality precedent. The AI’s output required the same level of review as a junior associate’s first draft. Instead, the tools proved useful for more focused tasks:

- Identifying Gaps: Asking AI to scan contracts for missing clauses (e.g., force majeure) helped strengthen documents—with the important caveat that users still need the legal judgment to vet the suggestions.

- Summarizing & Comparing: Tasks that once took hours, like pulling and analyzing “assignment” clauses across multiple agreements, became fast and manageable.

The professors also noted a lack of “healthy skepticism” among students. Students were overly trusting of outputs from brands like Lexis and Westlaw, assuming a level of reliability that isn’t yet warranted.

Their reflections offer a grounded, real-world view for lawyers, educators, and students navigating the early stages of AI integration.

The View from the Bench: “Experimentation with Verification”

This article from MIT Technology Review highlights accounts from several judges who are beginning to experiment with various generative AI technologies while remaining keenly aware of potential pitfalls and risks.

The accounts reinforce a familiar theme: for rote tasks and other low-stakes, low-impact work, AI use is generally acceptable. Examples include brainstorming questions to ask attorneys, summarizing case material, identifying timelines and key players, and aiding in research. Where the line is blurred or unclear, the judges have a firm grasp on the fact that they are obligated to review AI-generated output and can intervene and course-correct as they go.

The biggest concern is the accountability gap: when a lawyer makes an AI-driven error, it can be corrected; but as Judge Scott Schlegel warns, when a judge errs, “that’s the law,” and walk-backs are far more difficult.

The judges in the article co-authored a framework for how judicial officers can use AI and generative AI responsibly. The framework provides insights and guidelines for ethical use. It also names several non-negotiables, such as citation verification and rigorous human review before any AI-generated output goes on the record.

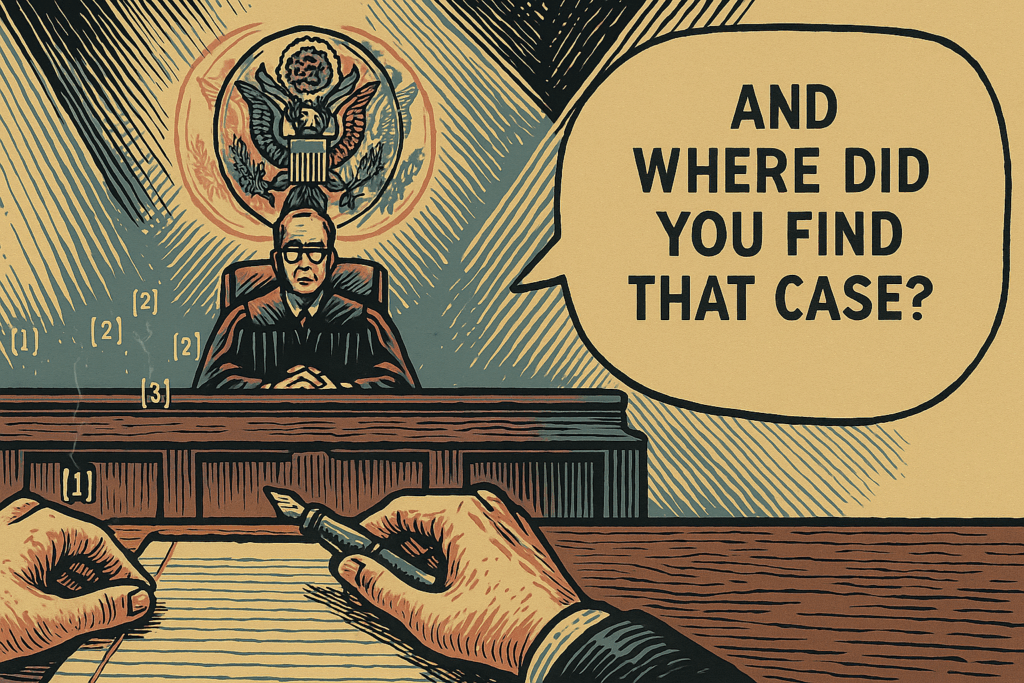

When AI Fails: The Critical Lesson of Fake Citations

For the legal profession, failing to rigorously review AI-generated output now carries career-altering consequences, making the case that AI literacy is a core technical competency for lawyers.

Two more recent cases serve as powerful cautionary tales:

- The Ethicist’s Error: In a story rife with irony, a partner at Goldberg Segalla, Danielle Malaty, who had previously published an article on the ethical considerations of AI, was terminated after taking responsibility for citing a fake, AI-hallucinated case in a filing. The firm stated she acted in direct violation of their AI use policy. The deeper issue, however, was a failure of process: three other attorneys, including the lead counsel, reviewed the brief and failed to catch the fictitious citation.

- A Cascade of Sanctions: In another recent case, a court ordered severe sanctions against a lawyer who cited multiple fake cases. The penalties were extensive: the counsel was kicked off the case, the brief was stricken, and the court ordered the lawyer to write letters of apology to the judges to whom she attributed the fake cases and to send a copy of the sanctions order to every judge presiding over any of her other cases. The cautionary note is a must-read and an essential lesson on maintaining professional responsibility.

These incidents drive home a critical point for legal education: the failures are as much about flawed AI literacy, workflow, and process as they are about flaws in the tool itself. Crucially, the core professional duty to verify sources has not been suspended by the arrival of AI. These cases create an undeniable mandate to actively practice verification as a non-negotiable step in the legal process.

Can AI Win in Court? A Glimpse into the Future of Legal Advocacy

New real-world experiments are beginning to test the limits of what these tools can do—from expanding access to justice for the public to augmenting the work of elite Supreme Court litigators. A recent episode of the AI and the Future of Law podcast unpacked two powerful stories that every legal professional should pay attention to.

The People’s Lawyer: An AI-Powered Appellate Win

The first story highlights AI’s potential to democratize the law. A self-represented litigant, facing a $55,000 judgment and unable to afford a lawyer, turned to ChatGPT for help. By using the AI to navigate the complexities of their housing case, they successfully appealed and had the judgment overturned.

This is a profound demonstration of how AI can serve as a force multiplier for individuals who would otherwise be locked out of the legal system. It raises critical questions for legal education: How do we prepare our students for a future where clients are also leveraging these tools? And what is our role in helping to create and regulate systems that ensure this access is both effective and responsible?

The Robot Advocate: An AI Argues Before the “Supreme Court”

On the other end of the spectrum is an experiment by Adam Unikowsky, a Supreme Court litigator who decided to see if an AI could replace him. He fed the briefs from one of his own Supreme Court cases into Claude and then prompted it to play the role of oral advocate, answering the actual questions posed by the justices during the real argument.

The results, which he detailed in his article “Automating Oral Argument,” were fascinating. In it, Adam notes that the AI’s performance was not just coherent; it flawlessly recalled minute details from the record, provided direct and accurate answers, and even crafted clever responses. For example, he writes: “Claude gave several unusually clever answers, making arguments I didn’t think of.” He argues compellingly that courts should permit, and even encourage, experimentation with AI in oral arguments, especially for self-represented litigants.

Prompt Tip: Make the AI Interview You for Better Drafts

AI-generated drafts are often underwhelming, though sometimes that’s a prompt problem. A generic, vague prompt will indeed produce a generic draft, but with better context and iteration, results can vastly improve. Try this method to turn your chat assistant into a focused associate that interviews you for what it needs.

The Generic Prompt (and Generic Results):

This will produce a boilerplate document that requires heavy editing and lacks client-specific nuance.

Try the “Interview Me” Prompt (for a High-Quality First Draft):

Instead, start your next drafting project with this prompt.

This method reshapes the typical AI exchange: it forces you to clarify your thinking and grounds the AI’s output in your specific context, elevating it from a generic intern to a sharp, context-aware assistant.
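For anyone who wants to reuse the interview approach across projects, it can be kept as a small template. The Python sketch below is only an illustration: the function name `build_interview_prompt`, its parameters, and the prompt wording are all assumptions made for this example, not the prompt from this newsletter.

```python
def build_interview_prompt(task: str, max_questions: int = 5) -> str:
    """Build a prompt that asks the assistant to interview the user before drafting.

    Hypothetical helper: the wording is an illustrative assumption,
    not an official or recommended prompt.
    """
    if max_questions < 1:
        raise ValueError("max_questions must be at least 1")
    return (
        f"I need help with the following drafting task: {task}\n"
        "Before you write anything, interview me. "
        f"Ask me up to {max_questions} questions, one at a time, about the client, "
        "the goals, the jurisdiction, and any constraints you need to know. "
        "Wait for my answer to each question before asking the next. "
        "When you have what you need, summarize your understanding "
        "and then produce a first draft."
    )


# Example: paste the resulting text into any chat assistant.
prompt = build_interview_prompt(
    "a consulting agreement for a small design studio", max_questions=4
)
print(prompt)
```

Capping the number of questions keeps the interview short, and asking for a summary before the draft gives you a chance to correct misunderstandings early.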

Tool Updates & Other News

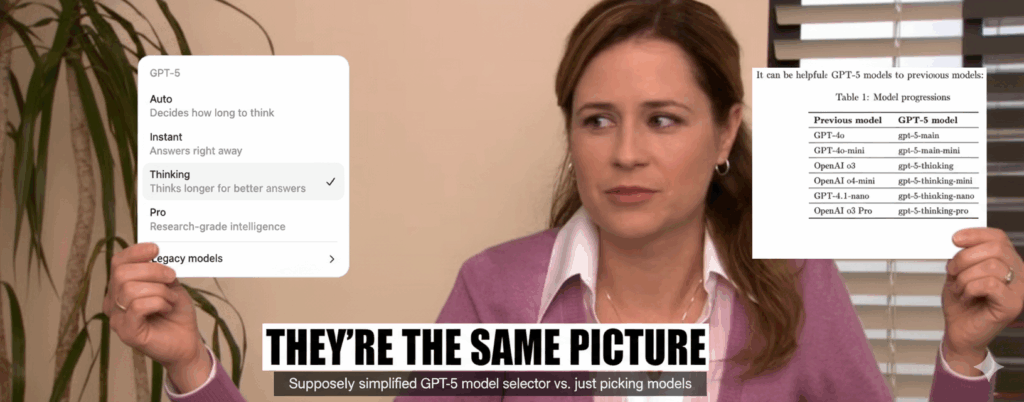

ChatGPT

OpenAI has released GPT-5, now available in ChatGPT and Microsoft Copilot. This update is part model release, part intelligent routing system. If you don’t use the model picker, ChatGPT will now choose the best model for your query. Despite the “GPT-5” label, it’s really a set of models behind the scenes:

- GPT-5 ≈ 4o, 4.1, 4.5, 4.1-mini, o4-mini, or o4-mini-high

- GPT-5 Thinking ≈ o3

- GPT-5 Pro ≈ o3-Pro (Pro, Team and Business plans only)

For deeper dives, see Ethan Mollick and The Neuron.

Gemini

Google continues rapid updates across its AI tools:

- Learning Mode: A tutor-style interaction mode for deeper understanding

- NotebookLM: Now includes a video overview mode that generates visual presentations from source material

- Gemini 2.5 Flash Image (aka Nano Banana 🍌): Native image generation with best-in-class editing capabilities. Prompting guide available.

Claude

- Memory: Claude can now reference past chats and user preferences in any conversation.

- More Context: 1M-token context window now live via API (chat coming soon)

- Browsing Agent: Chrome extension pilot gives Claude live context and the ability to take actions on your behalf

Other AI News

- Campus AI Exchange: Interactive map that shows how different higher education institutions are developing and sharing their AI policies.

- Lexis Gen AI Tracker: Comprehensive tracking resource for federal and state court rules governing generative AI use in legal proceedings

- 6 Tenets of Postplagiarism: Dr. Sarah Eaton rethinks academic integrity in the age of generative AI

- AI in Government: In a major push for adoption, OpenAI, Anthropic, and Google are offering their enterprise AI tools to all US federal agencies for a nominal fee ($1 or less per agency) for the next year.

- Fighting “Cognitive Debt” in the Classroom: An analysis from The Neuron introduces the concept of “cognitive debt,” where relying on AI for answers can weaken learning. It advocates for a “struggle-first” principle, urging educators to have students engage with problems on their own before using AI as a thought partner.

- A Practical Guide to AI Literacy: A recent EDUCAUSE panel provides actionable strategies for educators, including creating a “syllabus menu” of AI policies and teaching frameworks like CLEAR (Concise, Logical, Explicit, Adaptive, Reflective) to improve student prompting skills.

- ChatGPT Agent Mode: ChatGPT 4o completes a homework assignment on Canvas in under 3 minutes using a basic prompt—logs in, finds the task, submits the work. Now flip it: what if you provided the content and it updated your Canvas site for you?